Alternative implementations of the FileSystem API are also possible, such as file systems backed by S3 or Azure Storage. Since applications are coded against the abstract FileSystem instead of being tightly coupled to HDFS, they can easily retarget their workloads to different file systems. For example, running DistCp with an S3 bucket as the destination instead of HDFS is just a matter of passing a URL that points to the S3 bucket when you launch the DistCp command.
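To make the retargeting idea concrete, here is a minimal sketch in plain Java. It does not use the real Hadoop API: `SketchFileSystem`, `SketchHdfs`, `SketchS3`, and `SketchCopyTool` are hypothetical names invented for this illustration, and the `s3a` scheme and example URLs are assumptions. The point is only that the copy logic talks to the abstract type, and the URL scheme decides which implementation sits behind it.

```java
import java.net.URI;

/** Hypothetical stand-in for Hadoop's abstract FileSystem class; not the real API. */
abstract class SketchFileSystem {
    /** Human-readable name of the backing store. */
    abstract String name();
}

/** Hypothetical implementation standing in for an HDFS client. */
class SketchHdfs extends SketchFileSystem {
    @Override String name() { return "HDFS"; }
}

/** Hypothetical implementation standing in for an S3-backed file system. */
class SketchS3 extends SketchFileSystem {
    @Override String name() { return "S3"; }
}

/** Toy copy tool that only ever sees the abstract SketchFileSystem type. */
class SketchCopyTool {
    /** Picks an implementation from the URL scheme (assumed mapping, for illustration). */
    static SketchFileSystem resolve(String uri) {
        String scheme = URI.create(uri).getScheme();
        if (scheme == null) {
            throw new IllegalArgumentException("URI has no scheme: " + uri);
        }
        switch (scheme) {
            case "hdfs": return new SketchHdfs();
            case "s3a":  return new SketchS3();
            default:
                throw new IllegalArgumentException("No file system for scheme: " + scheme);
        }
    }

    /** Describes a copy; the logic is identical whatever the source and destination are. */
    static String plan(String src, String dst) {
        return resolve(src).name() + " -> " + resolve(dst).name();
    }

    public static void main(String[] args) {
        // Same tool, same code path; only the destination URL changes.
        System.out.println(plan("hdfs://namenode/data", "hdfs://namenode/backup"));
        System.out.println(plan("hdfs://namenode/data", "s3a://example-bucket/backup"));
    }
}
```

In real Hadoop code the lookup is done by the FileSystem class itself based on the URI and the configuration, so an application normally never writes a switch like this.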

Applications that we traditionally think of as running on HDFS, like MapReduce, are not in fact tightly coupled to HDFS code. Instead, these applications are written to use the abstract FileSystem API. The most familiar implementation of the FileSystem API is the DistributedFileSystem class, which is the client side of HDFS. The API is documented in the Hadoop JavaDocs for the FileSystem class.

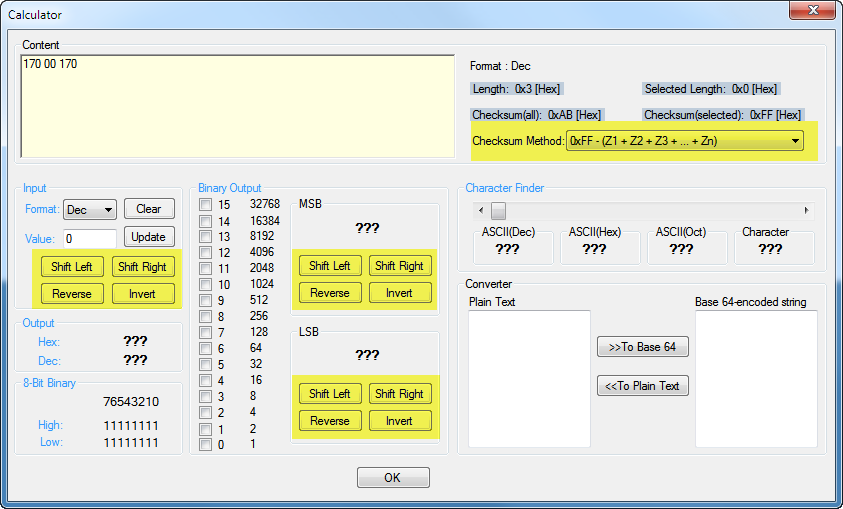


It may not be clear that terminology like "LocalFileSystem" and "ChecksumFileSystem" is in fact referring to Java class names in the Hadoop codebase. Hadoop contains not only HDFS, but also a broader abstract concept of "file system". This abstract concept is represented in the code by an abstract class named FileSystem.

Hello everyone, kindly help me with these queries (reference book: O'Reilly).

First of all, I'm not sure about the word "LocalFileSystem".

a) Does this mean the file system of the machine on which Hadoop is installed, for example ext2, ext3, NTFS, etc.?

b) There are also ChecksumFileSystem and others. Why does Hadoop have multiple filesystems? I thought it had only HDFS, apart from the local machine's filesystem.

Can someone explain this statement to me? It is very confusing to me right now.

1. "The Hadoop LocalFileSystem performs client-side checksumming." So if I'm correct, without this filesystem the client used to calculate the checksum for each chunk of data and pass it to the datanodes for storage? Correct me if my understanding is wrong.

"When you write a file called filename, the filesystem client transparently creates a hidden file, .filename.crc, in the same directory containing the checksums for each chunk of the file." Where is this filesystem client: at the client layer or at the HDFS layer?

"The chunk size is controlled by the bytes-per-checksum property, which defaults to 512 bytes. The chunk size is stored as metadata in the .crc file, so the file can be read back correctly even if the setting for the chunk size has changed. Checksums are verified when the file is read, and if an error is detected, LocalFileSystem throws a ChecksumException." How does this FileSystem differ from HDFS in terms of checksums?
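The per-chunk checksumming described in the quoted passage can be sketched in a few lines of plain Java. This is only an illustration under stated assumptions, not Hadoop's implementation: the class `ChunkChecksums` and its methods are hypothetical, CRC-32 from `java.util.zip` is assumed as the checksum, and the checksums are kept in an array instead of a hidden .crc file. The 512-byte chunk size is the default mentioned in the quote.

```java
import java.util.zip.CRC32;

/** Illustrative sketch of per-chunk checksumming; not Hadoop's actual code. */
class ChunkChecksums {
    /** Chunk size for this sketch, matching the 512-byte default quoted above. */
    static final int BYTES_PER_CHECKSUM = 512;

    /** Computes one CRC-32 value for each chunk of at most bytesPerChecksum bytes. */
    static long[] compute(byte[] data, int bytesPerChecksum) {
        if (bytesPerChecksum <= 0) {
            throw new IllegalArgumentException("chunk size must be positive");
        }
        int chunks = (data.length + bytesPerChecksum - 1) / bytesPerChecksum;
        long[] sums = new long[chunks];
        CRC32 crc = new CRC32();
        for (int i = 0; i < chunks; i++) {
            int offset = i * bytesPerChecksum;
            int length = Math.min(bytesPerChecksum, data.length - offset);
            crc.reset();
            crc.update(data, offset, length);
            sums[i] = crc.getValue();
        }
        return sums;
    }

    /** Returns the index of the first chunk that fails verification, or -1 if all match. */
    static int firstCorruptChunk(byte[] data, long[] expected, int bytesPerChecksum) {
        long[] actual = compute(data, bytesPerChecksum);
        int common = Math.min(actual.length, expected.length);
        for (int i = 0; i < common; i++) {
            if (actual[i] != expected[i]) {
                return i;
            }
        }
        // A differing chunk count means the data was truncated or extended.
        return actual.length == expected.length ? -1 : common;
    }

    public static void main(String[] args) {
        byte[] data = new byte[1300]; // three chunks: 512 + 512 + 276 bytes
        for (int i = 0; i < data.length; i++) {
            data[i] = (byte) (i % 251);
        }
        long[] sums = compute(data, BYTES_PER_CHECKSUM); // "write" time
        data[600] ^= 0x01; // simulate a bit flip inside the second chunk
        System.out.println("first corrupt chunk: "
                + firstCorruptChunk(data, sums, BYTES_PER_CHECKSUM)); // "read" time
    }
}
```

Storing the chunk size alongside the checksums, as the quote says the .crc file does, is what lets a reader verify old files even after the configured chunk size changes; in this sketch the caller simply passes the same size to both methods.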
